execute actions.

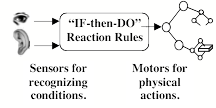

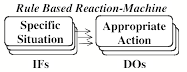

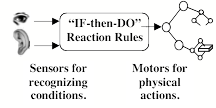

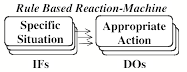

What could be in that central knowledge box? Let’s begin with the simplest case: suppose that we already know, in advance, all the situations our robot will face. Then all we need is a catalog of simple, two-part “If–>Do” rules—where each If describes one of those situations—and its Do describes which action to take. Let’s call this a “Rule-Based Reaction-Machine.”

If temperature wrong, Adjust it to normal.

If you need some food, Get something to eat.

If you’re facing a threat, Select some defense.

If an active sexual drive, Search for a mate.
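The four rules above can be read as a small lookup procedure. The following is a minimal sketch of such a Rule-Based Reaction-Machine, not a model taken from the text: the `Rule` class, the `react` function, and the situation keys (such as `needs_food`) are hypothetical names introduced only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# A situation is modeled here as a set of named yes/no observations.
# This representation is an assumption made for the sketch.
Situation = Dict[str, bool]


@dataclass
class Rule:
    """One two-part If->Do rule: a test on the situation and an action name."""
    condition: Callable[[Situation], bool]  # the "If" part
    action: str                             # the "Do" part


# The catalog of rules, mirroring the four examples in the text.
RULES: List[Rule] = [
    Rule(lambda s: s.get("temperature_wrong", False), "Adjust it to normal"),
    Rule(lambda s: s.get("needs_food", False), "Get something to eat"),
    Rule(lambda s: s.get("facing_threat", False), "Select some defense"),
    Rule(lambda s: s.get("sexual_drive_active", False), "Search for a mate"),
]


def react(situation: Situation, rules: List[Rule] = RULES) -> List[str]:
    """Return the action of every rule whose If part matches the situation."""
    return [rule.action for rule in rules if rule.condition(situation)]


if __name__ == "__main__":
    print(react({"needs_food": True, "facing_threat": True}))
```

Note that this sketch simply returns every matching action. It says nothing about what to do when several rules fire at once, or when no rule matches a situation that was not foreseen.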

Many If–>Do rules like these are born into each species of animals. For example, every infant is born with ways to maintain its body temperature: when too hot, it can pant, sweat, stretch out, and vasodilate; when too cold, it can retract its limbs or curl up, shiver, vasoconstrict, or otherwise generate more heat. [See §6-1.2.] Later in life we learn to use actions that change the external world.

If your room is too hot, Open a window.

If too much sunlight, Pull down the shade.

If you are too cold, Turn on a heater.

If you are too cold, Put on more clothing.

This idea of a set of “If–>Do rules” portrays a mind as nothing more than a bundle of separate reaction-machines. Yet although this concept may seem too simplistic, in his masterful book, The Study of Instinct,[4] Nikolaas Tinbergen showed that such schemes could be remarkably good for describing some things that animals do. He also proposed some important ideas about what might turn those specialists on and off, how they accomplish their various tasks, and what happens when some of those methods fail.

Nevertheless, no structure like this could ever support the intricate feelings and thoughts of adults—or even of infants. The rest of this book will try to describe systems that work more like human minds.

§1-5. Seeing a Mind as a Cloud of Resources.

Today, there are many thinkers who claim that all the things that human minds do result from processes in our brains—and that brains, in turn, are just complex machines. However, other thinkers still insist that there is no way that machines could have the mysterious things we call feelings.

Citizen: A machine can just do what it’s programmed to do, and it does so without any thinking or feeling. No machine can get tired or bored or have any kind of emotion at all. It cannot care when something goes wrong and, even when it gets things right, it feels no sense of pleasure or pride, or delight in those accomplishments.

Vitalist: That’s because machines have no spirits or souls, and no wishes, ambitions, desires, or goals. That’s why a machine will just stop when it’s stuck—whereas a person will struggle to get something done. Surely this must be because people are made of different stuff; we are alive and machines are not.

In older times, those were plausible views because we had no good ideas about how biological systems could do what they do. Living things seemed completely different from machines before we developed modern instruments. But then we developed new instruments—and new concepts of physics and chemistry—that showed that even the simplest living cells are composed of hundreds of kinds of machinery. Then, in the 20th century, we discovered a really astonishing fact: that the ‘stuff’ that a machine is made of can be arranged so that its properties have virtually no effect upon the way in which that machine behaves!

Thus, to build the parts of any machine, we can use any substance that’s strong and stable enough: all that matters is what each separate part does, and how those parts are connected up. For example, we can make different computers that do the same things, using either the latest electrical chips or wood, string and paper clips, by arranging their parts so that, seen from outside, each of them carries out the same processes. [See §§Universal Machines.]

This relates to those questions about how machines could have emotions or feelings. In earlier times, it seemed to us that emotions and feelings were basically different from physical things—because we had no good ways to imagine how there could be anything in between. However, today we have many advanced ideas about how machines can support complex processes—and the rest of this book will show many ways to think of emotions and feelings as processes.

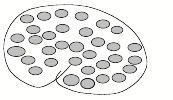

This view transforms our old questions into new and less mysterious ones like, “What kinds of processes do emotions involve?” and, “How could machines embody those processes?” For then we can make progress by asking how a brain could support such processes, and today we know that every brain contains a great many different parts, each of which does certain specialized jobs. Some can recognize various patterns, others can supervise various actions, yet others can formulate goals or plans, and some can engage large bodies of knowledge. This suggests a way to envision a mind (or a brain) as made of hundreds or thousands of different resources.

At first this image may seem too vague, yet even in this simple form it suggests how minds could change their states. For example, in the case of Charles’s infatuation, it suggests that some process has switched off some resources that he normally uses to recognize someone else’s defects. The same process also arouses some other resources that tend to replace his more usual goals with ones that he thinks Celia wants him to hold.

Similarly, the state we call Anger appears to select a set of resources that help you react with more speed and strength—while also suppressing some other resources that usually make you act prudently; Anger replaces cautiousness with aggressiveness, trades empathy for hostility, and makes you plan less carefully.
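One way to make this picture concrete is to treat a mental state as the set of resources that are currently active, and an emotion as a process that arouses some resources while suppressing others. The sketch below is only an illustration under that assumption: the resource names, the `BASELINE` set, the `SELECTORS` table, and `apply_selector` are all hypothetical, and real minds would involve far more resources than these.

```python
from typing import Dict, FrozenSet, Set, Tuple

# Resources assumed to be active in an ordinary, calm state (hypothetical names).
BASELINE: FrozenSet[str] = frozenset(
    {"caution", "empathy", "careful_planning", "defect_recognition"}
)

# Each entry maps a state to (resources it arouses, resources it suppresses),
# loosely following the descriptions of Anger and infatuation in the text.
SELECTORS: Dict[str, Tuple[Set[str], Set[str]]] = {
    "anger": (
        {"speed", "strength", "aggressiveness", "hostility"},
        {"caution", "empathy", "careful_planning"},
    ),
    "infatuation": (
        {"adopt_partner_goals"},
        {"defect_recognition"},
    ),
}


def apply_selector(active: FrozenSet[str], name: str) -> Set[str]:
    """Return the new set of active resources after the named state takes hold."""
    arouse, suppress = SELECTORS[name]
    # Switch off the suppressed resources, then switch on the aroused ones.
    return (set(active) - suppress) | arouse


if __name__ == "__main__":
    print(sorted(apply_selector(BASELINE, "anger")))
    print(sorted(apply_selector(BASELINE, "infatuation")))
```

The point of the sketch is that the same pool of resources yields very different behavior depending on which subset is switched on, without any single resource being "the emotion" itself.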

More generally, this image suggests that there are some ‘Selectors’ built into our